These days, the use of database stored procedures is regarded by many as a bad practice.

Those who dislike stored procedures tend to regard them as incompatible with the three-tier architecture:

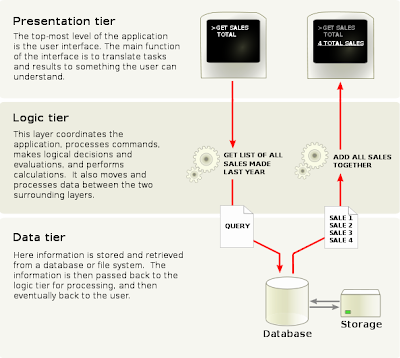

By breaking up an application into tiers, developers only have to modify or add a specific layer, rather than have to rewrite the entire application over. There should be a presentation tier, a business or data access tier, and a data tier.

This is illustrated as follows:

Note that the "tiers" in the figure should actually be labelled "layers", for as the accompanying Wikipedia article says:

"The concepts of layer and tier are often used interchangeably. However, one fairly common point of view is that there is indeed a difference, and that a layer is a logical structuring mechanism for the elements that make up the software solution, while a tier is a physical structuring mechanism for the system infrastructure."

In fact, those who argue that stored procedures are bad tend to equate the three logical layers with three physical tiers:

- Data layer = data tier (database)

- Logic layer = middle tier (application server)

- Presentation layer = presentation tier

But if we accept the above definition of "layers" and "tiers", it is obvious that the following is a valid mapping as well:

- Data layer = data tier (database)

- Logic layer = data tier (database)

- Presentation layer = presentation tier

In other words, the database becomes our "logic layer" through the use of database stored procedures, which, as the name implies, are physically stored (and executed) in the database. (And although I use the term "stored procedure", I'm primarily talking about Oracle and PL/SQL, where the PL/SQL code should be put in packages rather than stand-alone procedures.)

But why is this a bad idea? In fact, as it turns out, it might not be a bad idea at all. The usual reasons given against the use of stored procedures for "business logic" (or for anything at all, really) tend to be myths (or outright lies), repeated so many times that they are taken as the truth.

So let's bust these myths, once and for all. And whenever someone argues against stored procedures using one of these myths, just give them a link to this blog post. (And leave comments to prove me wrong, if you will.)

Myth #1: Stored procedures can't be version controlled

Stored procedure code lives in text files, which can be version controlled like any other piece of code or document. Storing/compiling the code in the database is just like (re-)deploying any other code.

Claiming that stored procedures cannot be version controlled (because they are in the database) is like saying your application source code (Java, C# or whatever) cannot be version controlled because it is compiled and deployed to an application server.
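As a rough sketch of that idea: the snippet below treats a directory of `.sql` text files (the part you would keep under version control) as the source, and "deploys" it by running each file against the database. It uses Python's built-in `sqlite3` module purely as a stand-in for Oracle so that it is self-contained; the file names, the `orders` table and the `big_orders` view are all made up for illustration, and a real Oracle deployment would run `CREATE OR REPLACE PACKAGE` scripts instead.

```python
import sqlite3
import tempfile
from pathlib import Path


def deploy(conn, source_dir):
    """Run every .sql file in source_dir, in file-name order; return the names applied."""
    applied = []
    for path in sorted(Path(source_dir).glob("*.sql")):
        conn.executescript(path.read_text(encoding="utf-8"))
        applied.append(path.name)
    return applied


def demo():
    # The temporary directory plays the role of a version-control checkout.
    with tempfile.TemporaryDirectory() as checkout:
        src = Path(checkout)
        (src / "01_tables.sql").write_text(
            "CREATE TABLE IF NOT EXISTS orders (\n"
            "    id INTEGER PRIMARY KEY,\n"
            "    amount INTEGER NOT NULL\n"
            ");\n",
            encoding="utf-8",
        )
        # Drop-and-create makes the script safe to run again, loosely
        # comparable to CREATE OR REPLACE in Oracle.
        (src / "02_views.sql").write_text(
            "DROP VIEW IF EXISTS big_orders;\n"
            "CREATE VIEW big_orders AS\n"
            "    SELECT id, amount FROM orders WHERE amount >= 1000;\n",
            encoding="utf-8",
        )

        conn = sqlite3.connect(":memory:")
        first = deploy(conn, src)
        second = deploy(conn, src)  # redeploying the same source is harmless
        conn.execute("INSERT INTO orders (id, amount) VALUES (1, 50), (2, 5000)")
        rows = conn.execute("SELECT id FROM big_orders").fetchall()
        conn.close()
        return first, second, rows


if __name__ == "__main__":
    print(demo())
```

The database only ever receives what is in the files, so the files (and their history) remain the single source of truth.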

Myth #2: Managing the impact of changes in the database is hard

Databases such as Oracle have built-in fine-grained dependency tracking.

A wealth of information about your code is exposed via data dictionary views.

Myth #3: Database tools lack modern IDE features

There are a number of free and commercial PL/SQL code editors and IDEs, and all have various levels of syntax highlighting, code insight and refactoring support.

Myth #4: Stored procedures always result in spaghetti code

To this, I can only say that bad programmers can make pasta in any language (the above is a visual representation of a Java or .NET enterprise framework "several dozen megabytes chock full of helper classes like IEnterpriseAuthenticationProviderFactoryManagementFactory").

And a good programmer can create "beautiful" code in COBOL, Visual Basic, PHP... and any stored procedure language, for that matter.

Myth #5: Code in the database can’t be properly encapsulated and reused, you need an object-oriented language for that

PL/SQL packages, views, pipelined functions and Ref Cursors offer encapsulation and reuse. And PL/SQL has object-oriented features, too.

Myth #6: Stored procedure languages are primitive, they lack basic features such as exception handling and dynamic execution

PL/SQL has had proper exception handling from the start, over 20 years ago (whereas structured exception handling, in the form of TRY...CATCH, was only introduced to SQL Server in 2005).

DBMS_SQL, EXECUTE IMMEDIATE and "weak" Ref Cursors enable dynamic execution of code. Parameter overloading and the ANYDATA and ANYTYPE types allow for generic code to be written.

Myth #7: Debugging stored procedures is hard/impossible

Both Oracle and SQL Server have built-in debugging capabilities, exposed via graphical user interfaces in the common IDEs, with full support for stepping through code, inspecting values, etc.

Myth #8: Stored procedures can't be unit tested

There are a number of free and commercial unit testing frameworks available for PL/SQL. Steven Feuerstein, one of the world's leading experts on the Oracle PL/SQL language, has been preaching the importance of unit testing in the database for years, and has developed several of the available unit testing frameworks.
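To give a feel for what testing database-resident logic looks like, here is a minimal sketch. It does not use any of the PL/SQL frameworks; instead it uses Python's `unittest` and the built-in `sqlite3` module as a stand-in for Oracle, with a trigger playing the role of the stored logic. The `accounts` table and the overdraft rule are hypothetical.

```python
import sqlite3
import unittest

SCHEMA = """
CREATE TABLE accounts (
    id      INTEGER PRIMARY KEY,
    balance INTEGER NOT NULL
);

-- Database-resident rule: reject any update that would overdraw an account.
CREATE TRIGGER accounts_no_overdraft
BEFORE UPDATE OF balance ON accounts
WHEN NEW.balance < 0
BEGIN
    SELECT RAISE(ABORT, 'insufficient funds');
END;
"""


class OverdraftRuleTest(unittest.TestCase):
    def setUp(self):
        # Each test gets a fresh in-memory database with a known starting state.
        self.conn = sqlite3.connect(":memory:")
        self.conn.executescript(SCHEMA)
        self.conn.execute("INSERT INTO accounts (id, balance) VALUES (1, 100)")

    def tearDown(self):
        self.conn.close()

    def balance(self):
        row = self.conn.execute(
            "SELECT balance FROM accounts WHERE id = 1"
        ).fetchone()
        return row[0]

    def test_valid_withdrawal_succeeds(self):
        self.conn.execute("UPDATE accounts SET balance = balance - 40 WHERE id = 1")
        self.assertEqual(self.balance(), 60)

    def test_overdraft_is_rejected(self):
        # sqlite3 reports the trigger's RAISE(ABORT) as a DatabaseError subclass.
        with self.assertRaises(sqlite3.DatabaseError):
            self.conn.execute(
                "UPDATE accounts SET balance = balance - 500 WHERE id = 1"
            )
        # The failed statement must leave the balance untouched.
        self.assertEqual(self.balance(), 100)


if __name__ == "__main__":
    unittest.main()
```

The shape is the same as for any other unit test: set up a known state, call the code under test, and assert on the outcome. The only difference is that the code under test lives in the database.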

Myth #9: Stored procedures are not portable, and tie you to one platform

This is the "vendor lock-in" argument. But the fact is that PL/SQL runs on multiple databases, such as DB2:

"IBM DB2 9.7 for Linux, UNIX, and Windows has out-of-the-box support for Oracle's SQL and PL/SQL dialects. This allows many applications written against Oracle to execute against DB2 virtually unchanged."

And Postgres (EnterpriseDB):

"Postgres Plus Advanced Server implements a comprehensive suite of Oracle-compatible functionality within and around the core PostgreSQL engine, including: (...) Oracle SQL syntax and semantics, Functions and Packages, PL/SQL (extensive support)"

Add to this the fact that the Oracle database runs on more operating systems than any other database, which means that your PL/SQL code will seamlessly transfer from Windows to Unix to Linux-based systems.

So PL/SQL-based code can actually be said to be more portable than, for example, .NET code (despite the existence of Mono). There are very few truly portable technologies; even Java is "write once, debug everywhere".

Myth #10: It's stupid/dangerous to put business logic in the database

This claim is usually made without any specific reason as to why it is stupid or dangerous. It usually "just is", because it is "against best practice" and "everybody else is putting the business logic in the middle tier". Sometimes it is claimed that putting logic in the database "mixes concerns", which must be a bad thing.

The problem with "business logic" is that nobody has a clear definition of what it is (but "you'll know it when you see it"). For example, where do you draw the line between "data logic" and "business logic"? Primary keys, foreign key constraints, unique key constraints, not null constraints, check constraints -- are these "data logic" or "business logic"? "Discount must be between 0% and 5%", is that a business rule or a data constraint, and/or is it a validation rule in the presentation layer?

The fact is, if you move ALL your logic into stored procedures, you entirely avoid the "mixing of concerns" between the data tier and the logic tier. (And if you think such an approach dooms your project to failure, consider the next myth, which features an example of a massive [and wildly successful] application written entirely in the database.)

Oh, and by the way, if your business logic lives anywhere other than in the database, you always run the risk of someone or something bypassing your middle tier (for example by logging in with SQL*Plus), directly updating the database and possibly corrupting the data.
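A small sketch of what the database can do about that, taking the "discount must be between 0% and 5%" rule as the example. Python's built-in `sqlite3` module stands in for Oracle here, purely so the snippet is self-contained, and the `orders` table and `discount_pct` column are hypothetical. The rule is declared as a check constraint, and the update is issued directly against the table, the way someone bypassing the middle tier would do it.

```python
import sqlite3


def make_db():
    """Create an in-memory database whose table carries the discount rule itself."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE orders (
            id           INTEGER PRIMARY KEY,
            discount_pct REAL NOT NULL
                CHECK (discount_pct BETWEEN 0 AND 5)
        )
        """
    )
    conn.execute("INSERT INTO orders (id, discount_pct) VALUES (1, 2.5)")
    conn.commit()
    return conn


def try_direct_update(conn, order_id, discount_pct):
    """Update the table directly, with no middle tier involved.

    Returns True if the database accepted the change, False if it rejected it.
    """
    try:
        conn.execute(
            "UPDATE orders SET discount_pct = ? WHERE id = ?",
            (discount_pct, order_id),
        )
        conn.commit()
        return True
    except sqlite3.IntegrityError:
        # The check constraint fired; undo the open transaction.
        conn.rollback()
        return False


def current_discount(conn, order_id):
    row = conn.execute(
        "SELECT discount_pct FROM orders WHERE id = ?", (order_id,)
    ).fetchone()
    return row[0]


if __name__ == "__main__":
    conn = make_db()
    print(try_direct_update(conn, 1, 4.0))   # within the rule
    print(try_direct_update(conn, 1, 50.0))  # violates the rule
    print(current_discount(conn, 1))
```

Whatever client issues the second update, the database refuses it and the stored value stays valid. The same rule written only in middle-tier code would never have been consulted.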

So let's turn this around and conclude instead that:

"If your business logic is not in the database, it is only a recommendation."

Myth #11: Stored procedures can't scale

A frequent argument against stored procedures is that by placing all the work in the database server, your solution won't be able to scale up, because you need "application servers" in the middle tier to do that. The argument goes that the scalability of the database is limited because you can only have a single database server (or you need to rewrite your code to work with partitioned/sharded databases, as Facebook has done).

Of course, a lot of the people who throw around this kind of argument have never worked on an application or website which needed to scale up to millions of users (and to be clear, neither have I). That's because the vast majority of us work on much smaller enterprise business systems or "normal" websites (perhaps even the kind of website that can be well served with free database software on a server with less juice than your laptop).

But stored procedures CAN scale. It's only a matter of money. And if you have millions of users, you should be able to afford decent hardware.

Let's use Oracle Application Express (Apex) as an example of a big and complex PL/SQL application:

"[Application Express] lives completely within your Oracle database. It is comprised of nothing more than data in tables and large amounts of PL/SQL code. The essence of Oracle Application Express is approximately 425 tables and 230 PL/SQL packages containing 425,000+ lines of code."

This PL/SQL application can be deployed anywhere from your laptop to any kind of server:

The biggest and fastest server you can buy is currently Oracle Exadata.

"An 8 rack configuration has a raw disk capacity of 3,360 TB and 1,680 CPU cores for SQL processing. Larger configurations can be built with additional InfiniBand switches."

Oracle makes bold claims about this machine:

"Oracle claims that (..) two Exadata database systems would be able to handle Facebook’s entire computing load."

It's hard for me to verify that claim, not being associated with either Oracle or Facebook, but let's assume it has at least some truth to it.

So what about running our "stored procedure" application on Exadata?

"Does APEX Work on Exadata?

"Yep, Exadata is just Oracle. Let me say that again: It’s just Oracle. Exadata runs an 11.2 Database on Linux x64. It’s the exact same binary install if you download those binaries for “generic” Linux x64 11.2 from OTN. So, if your code / app runs on 11.2, it runs on Exadata. (..) The APEX Dev Team (my old friends and colleagues) did absolutely nothing to port their code to Exadata. I've run a number of customer benchmarks with customer's data and queries and have yet to make a single change to their queries or structures to make them work on Exadata."

So... without changing a single one of those 425,000 lines of code, this "stored procedure" application can run on my old laptop (I've even tried it on an Asus EEE netbook), or it can run with 1,680 CPU cores. Without offloading any logic to an application server.

I'd say that's pretty scalable.